Trigger a pipeline based on SQS notifications

In Data Pipeline Studio, you can use SQS notifications to automate tasks in a data pipeline or in a third-party tool, based on events such as the start or stop of a pipeline run. You can also consume events from a third-party tool to trigger a data pipeline. The following section explains the concept of an event consumer.

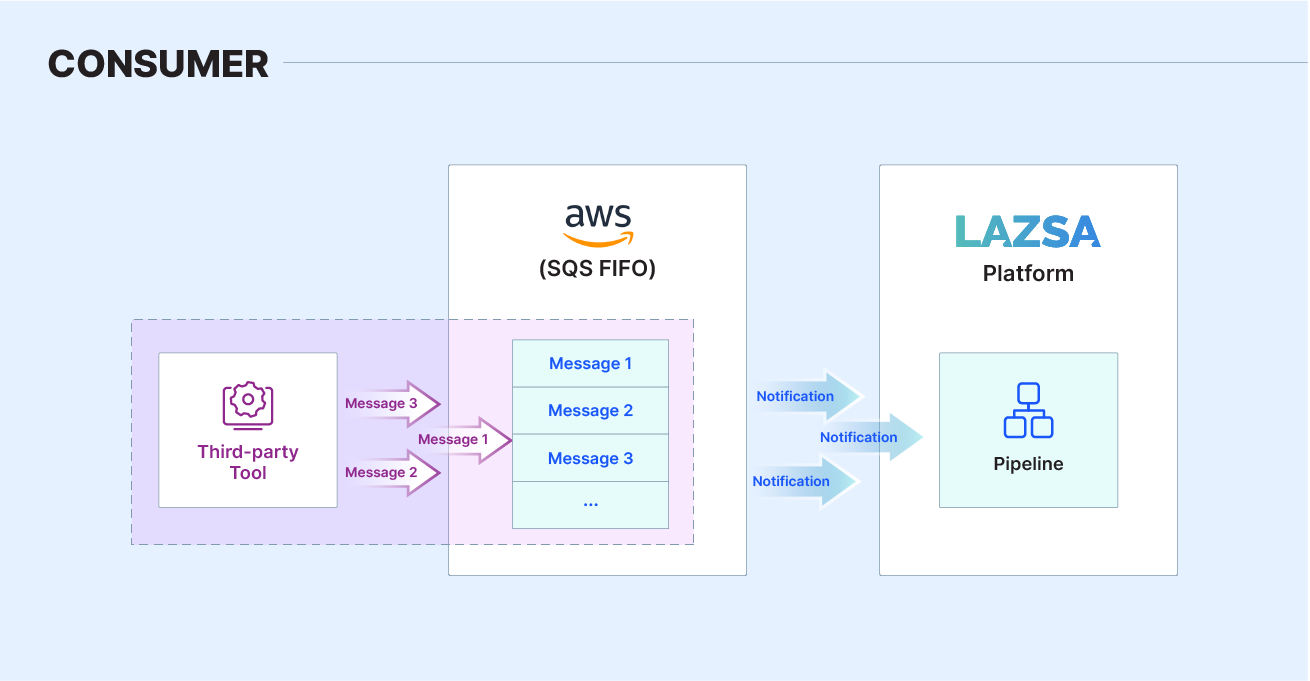

Consumer

A consumer is an element that listens to an SQS queue for events produced by a third-party tool or external process. The consumer triggers an action based on the events and the logic written for consuming the events.

In the illustration above, the Calibo Accelerate platform is the consumer. It listens to a specific queue of a configured SQS instance. Assume that a third-party tool produces events that are sent to the SQS queue. Depending on the logic that you write to consume the events, you can initiate or terminate a pipeline run. In this example, the events are produced by the third-party tool and consumed by the data pipeline.
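The consumer logic described above can be sketched roughly as follows. This is an illustration only, not the platform's implementation: the event shape (an `action` field set to `start` or `stop`) and the `start_run` and `stop_run` callbacks are hypothetical names chosen for the example.

```python
import json
from typing import Callable


def handle_event(
    message_body: str,
    start_run: Callable[[], None],
    stop_run: Callable[[], None],
) -> str:
    """Map one queue message to a pipeline action and report what was done."""
    event = json.loads(message_body)
    action = event.get("action")  # assumed field name, for illustration only

    if action == "start":
        start_run()  # initiate a pipeline run
        return "started"
    if action == "stop":
        stop_run()  # terminate the pipeline run
        return "stopped"
    return "ignored"  # events the consumer does not recognise are skipped
```

A consumer would call `handle_event` once for each message it reads from the queue, passing in whatever functions actually start and stop the pipeline.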

You can configure SQS as a producer or as a consumer. To trigger an action by listening to an SQS queue for event notifications, configure SQS as a consumer.

To configure SQS as a consumer

- In Data Pipeline Studio, click the ellipsis (...).

- Click Manage Notifications and then click the SQS tab.

- On the SQS screen, click Event Consumer and do the following:

  - Enable Polling – Enable this option to poll the queue for events. The polling frequency is set to 5 minutes.

    Note: To preserve the order of events in the Calibo Accelerate platform, use FIFO queues instead of standard queues.

  - Configurations – Select a configuration from the dropdown list.

  - Run Anyway – If the pipeline is currently running, this option stops the run and starts the pipeline run again.

- Copy the Sample JSON Request. You can use this JSON to trigger tasks or actions in a third-party tool. Currently, only starting and stopping a pipeline are supported.
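On the producing side, a third-party tool could place an event on the FIFO queue along these lines. This is a minimal sketch that assumes the tool is written in Python and uses the AWS SDK (`boto3`). The function names, the default group ID, and the payload are placeholders; in practice the payload would be the Sample JSON Request copied from the SQS screen.

```python
import json
import uuid


def build_fifo_message(queue_url: str, payload: dict, group_id: str) -> dict:
    """Build the keyword arguments for an SQS SendMessage call on a FIFO queue."""
    if not queue_url.endswith(".fifo"):
        raise ValueError("FIFO queue names (and their URLs) end with '.fifo'")
    return {
        "QueueUrl": queue_url,
        "MessageBody": json.dumps(payload),
        # Messages that share a group ID are delivered in the order they were sent.
        "MessageGroupId": group_id,
        # A unique ID per message, so repeated events are not dropped as duplicates.
        "MessageDeduplicationId": str(uuid.uuid4()),
    }


def send_event(queue_url: str, payload: dict, group_id: str = "pipeline-events") -> str:
    """Send one event to the queue and return the SQS message ID."""
    import boto3  # imported here so the builder above works without the AWS SDK

    sqs = boto3.client("sqs")
    response = sqs.send_message(**build_fifo_message(queue_url, payload, group_id))
    return response["MessageId"]
```

Because polling runs every 5 minutes, an event sent this way may take up to that long to be picked up by the consumer.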


| What's next? More Options or Features of Data Pipeline Studio |